It’s Alive!

It’s alive. And you need to see it at the upcoming OpenADR webinar:

📅 Date: Thursday, January 29, 8 AM US Pacific Time

🔗 Register now: [ link ].

We’re not talking vaporware or slideware. We’re talking a live, working demo of something that’s never been done before, and it’s going to change how demand flexibility and smart homes work forever.

The Problem Everyone’s Been Ignoring

Matter gave us interoperability. Home Assistant gave us open-source control. But both still force developers and manufacturers into predefined device types: Light. Switch. Thermostat. Lock.

Your innovative new device that’s part heater, part dehumidifier, part air purifier? Good luck figuring out which category to shoehorn it into. And forget about creating a “Peak Event Optimizer” service that coordinates across devices; that’s not even a “device type.”

Enter NuCoreAI: The First Truly Typeless Open-Source Smart Home Platform

🔹 No device types exist. Period. A Zigbee light bulb, a demand response service, and a robot assistant manager all use the exact same pattern: they describe their capabilities naturally, and the platform understands them.

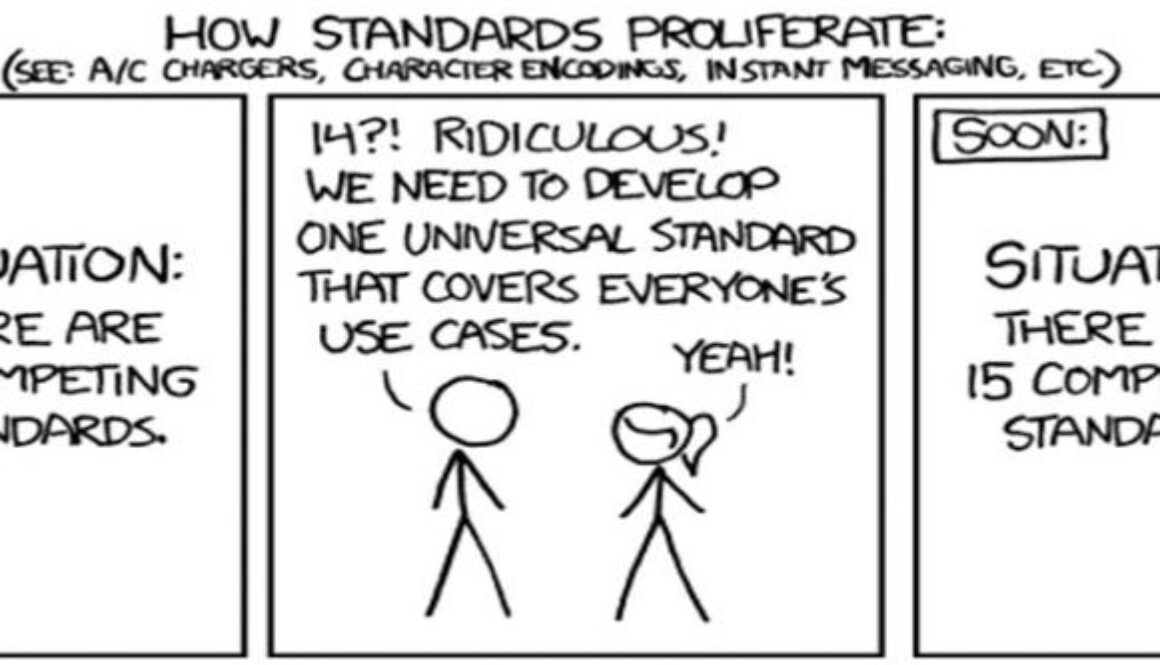

🔹 No standards battles. Use whatever protocol you want (Zigbee, Z-Wave, Wi-Fi, BACnet, OpenADR). Your plugin abstracts it. The platform doesn’t care.

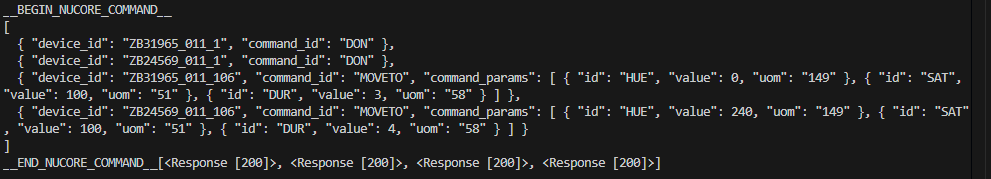

🔹 Everything describes itself at runtime. Not by conforming to a specification. Not by implementing predefined “clusters.” Devices tell the platform what they can do in natural language (their Properties, Commands, and Constraints), and the AI reads it. All of it.

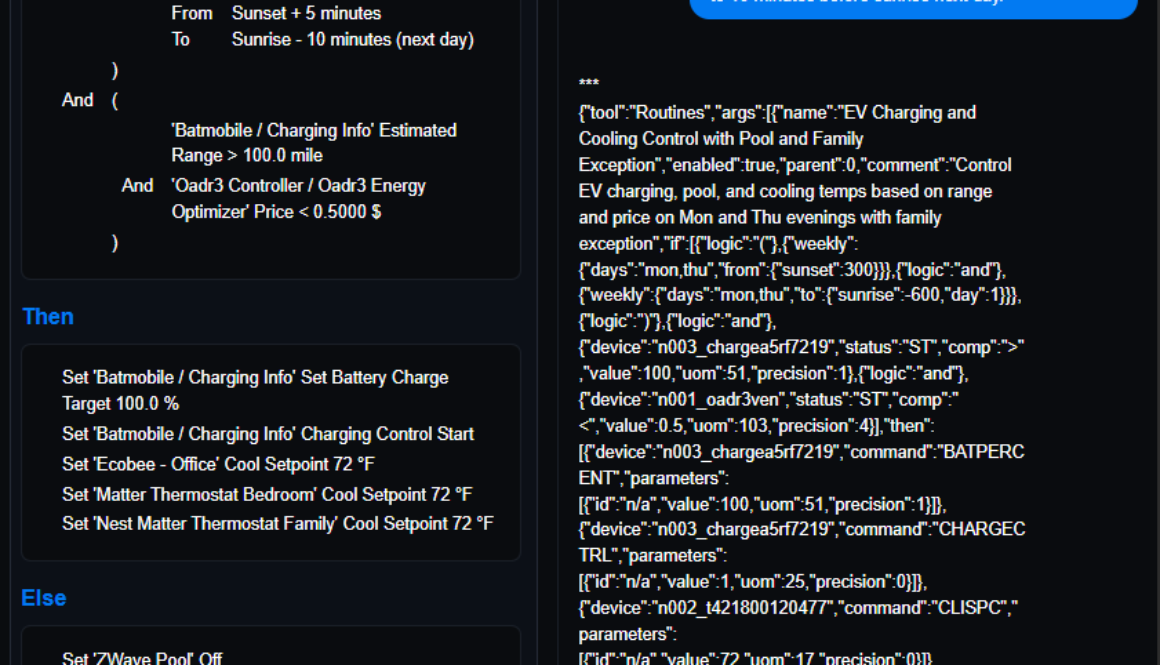

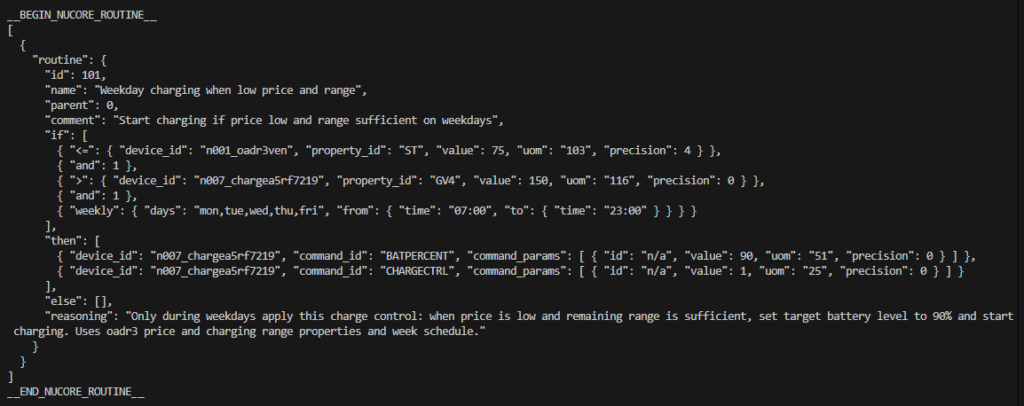

🔹 AI interprets. Platform executes. You talk naturally: “Set all cool temps to 72 on weekdays when I’m home in the summer months.” The AI creates an execution plan. The platform validates it against device constraints. Then, and only then, it executes. You get intelligence without losing control.
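To make the “describe, plan, validate, execute” loop a bit more concrete, here is a minimal Python sketch. It is not NuCoreAI’s actual plugin API, which this post doesn’t show; the classes `Device`, `Command`, and `Step` and the functions `validate_plan` and `execute_plan` are hypothetical. The natural-language `description` fields stand in for what the AI reads, the numeric ranges stand in for device constraints, and the hard-coded `plan` stands in for what the AI would generate. Note that the thermostat and the peak-event service are described with exactly the same structure: no device types.

```python
from collections.abc import Callable
from dataclasses import dataclass, field


# Hypothetical self-description records (not NuCoreAI's real schema).
@dataclass
class Command:
    name: str
    description: str  # natural-language text an AI model would read
    min_value: float  # machine-checkable constraint
    max_value: float


@dataclass
class Device:
    device_id: str
    description: str
    commands: dict[str, Command] = field(default_factory=dict)


@dataclass
class Step:
    device_id: str
    command: str
    value: float


def validate_plan(plan: list[Step], devices: dict[str, Device]) -> list[str]:
    """Return a list of problems; an empty list means every step is allowed."""
    errors = []
    for step in plan:
        device = devices.get(step.device_id)
        if device is None:
            errors.append(f"unknown device {step.device_id}")
            continue
        cmd = device.commands.get(step.command)
        if cmd is None:
            errors.append(f"{step.device_id} has no command {step.command}")
            continue
        if not (cmd.min_value <= step.value <= cmd.max_value):
            errors.append(
                f"{step.device_id}.{step.command}={step.value} "
                f"outside [{cmd.min_value}, {cmd.max_value}]"
            )
    return errors


def execute_plan(plan: list[Step], devices: dict[str, Device],
                 send: Callable[[Step], None]) -> list[str]:
    """Validate the whole plan first; send steps only if nothing is wrong."""
    errors = validate_plan(plan, devices)
    if errors:
        return errors  # reject the entire plan; nothing is executed
    for step in plan:
        send(step)
    return []


# A device and a service, described the same way (hypothetical examples).
devices = {
    "thermostat-1": Device("thermostat-1", "Living room thermostat", {
        "set_cool_setpoint": Command("set_cool_setpoint",
                                     "Cooling setpoint in degrees F", 60, 85)}),
    "peak-optimizer": Device("peak-optimizer",
                             "Service that reduces load during peak events", {
        "shed_load": Command("shed_load",
                             "Percent of flexible load to shed", 0, 100)}),
}

# Stand-in for an AI-generated plan.
plan = [Step("thermostat-1", "set_cool_setpoint", 72),
        Step("peak-optimizer", "shed_load", 20)]
sent: list[Step] = []
print(execute_plan(plan, devices, sent.append))  # []
print(len(sent))  # 2
```

The design choice worth noticing is that validation is all-or-nothing: if any step violates a constraint (say, a 95 °F setpoint against a 60 to 85 range), nothing is sent at all, which is one way to read “then, and only then, it executes.”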

Why This Is Different

Home Assistant? Amazing, but still built on device types. Matter? Industry standard, but requires certification for predefined categories. NuCoreAI? Genuinely typeless. A service plugin is indistinguishable from a device plugin. The platform orchestrates across ALL of them: no special cases, no exceptions.

See It Live

I’ll be demonstrating eisyAI at the OpenADR webinar with real demand response scenarios. Real devices. Real natural language. Real orchestration across devices AND services. Something that literally doesn’t exist anywhere else.

This isn’t a proof of concept. It’s working. It’s alive. Come see it. The webinar will cover:

- Command, control, and monitoring of one or many devices in plain English, as if you were talking to a person

- Creation of complex conditional logic programs right in front of your eyes

- Real capabilities, real devices, real scenarios

- Live Q&A

📅 Date: Thursday, January 29, 8 AM US Pacific Time

🔗 Register now: [ link ].

Open source. MIT licensed. Built by a non-profit foundation. No vendor lock-in. Ever.

The smart home industry has been fighting standards battles for 20 years. We just ended the war.